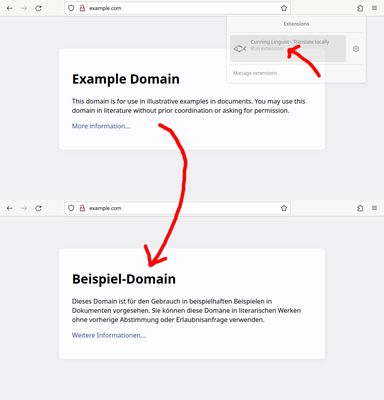

Cunning Linguist - Translate locally, by Gerold Meisinger

Translate any website using a local Ollama LLM server

5 users

About this extension

Translates any website locally: each text node of the page (or of the current selection) is sent one by one to a local Ollama server, translated by a large language model (LLM), and replaced inline.
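The one-by-one round trip described above can be sketched as follows. This is a minimal sketch, not the extension's actual code. It assumes Ollama's default port 11434, its `/api/generate` endpoint called with `stream: false` (which returns a single JSON object whose `response` field holds the generated text), the model tag `llama3.1:8b-instruct-q4_0`, and a made-up prompt. `FetchLike`, `translateOne` and `translateAll` are hypothetical names. The fetch function is passed in so the loop can be exercised without a running server.

```typescript
// Hypothetical sketch: translate strings sequentially through a local Ollama server.
// Assumptions (not taken from the extension): default port 11434, the model tag
// below, and the prompt wording.

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

const OLLAMA_URL = "http://127.0.0.1:11434/api/generate"; // Ollama's default port (assumed)
const MODEL = "llama3.1:8b-instruct-q4_0"; // assumed tag for the Q4_0 Llama 3.1 8B Instruct model

// Send one text to Ollama and return the model's answer.
async function translateOne(text: string, targetLang: string, fetchFn: FetchLike): Promise<string> {
  const res = await fetchFn(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      model: MODEL,
      // Hypothetical prompt; the extension's real prompt is not documented here.
      prompt: `Translate the following text to ${targetLang}. Reply with the translation only.\n\n${text}`,
      stream: false, // ask for one JSON object instead of a stream of chunks
    }),
  });
  if (!res.ok) throw new Error(`Ollama request failed: HTTP ${res.status}`);
  const data = (await res.json()) as { response: string };
  return data.response.trim();
}

// Translate texts strictly one after another (one request in flight at a time).
async function translateAll(texts: string[], targetLang: string, fetchFn: FetchLike): Promise<string[]> {
  const out: string[] = [];
  for (const t of texts) {
    // Whitespace-only text nodes are kept unchanged and never sent to the model.
    if (t.trim() === "") {
      out.push(t);
      continue;
    }
    out.push(await translateOne(t, targetLang, fetchFn));
  }
  return out;
}
```

In a content script one could collect the `nodeValue` of each text node, call `translateAll(texts, "English", fetch)`, and write the results back into the same nodes. Awaiting each request before sending the next mirrors the one-by-one behavior described above.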

Requires an Ollama installation and a GPU with at least 8 GB of VRAM!

Install the Ollama server (MIT license)!

On first start, this downloads a large model file of about 5 GB (see Meta's Llama3.1-8b-instruct-Q4_0 model).

To translate, click the extension button "Cunning Linguist" (fish icon); this translates the whole website or, if text is selected, only the selection. The first use may take a few minutes for the download plus a few seconds to load the model from disk into RAM; after that, each text node should translate in about a second.

Required permissions:

- 127.0.0.1/localhost: send API requests to your local Ollama server. No data will be transferred outside of your computer!

- activeTab: change the text in the active tab

- storage: save settings

Rating: 0 (1 user)
